Using AI to generate articles from scratch has a couple of drawbacks:

- Irrelevant content: the AI may go off on a tangent and cover irrelevant topics.

- Hallucinations: the AI can make up facts and generate incorrect statements.

We can solve these problems by taking content from the top-ranking articles in Google and using it to guide the AI model.

This technique is known as ‘retrieval-augmented generation’, or RAG for short.

You can read more about it in OpenAI's explanation of the technique.

We give the LLM the relevant content and it uses that content to generate a response.

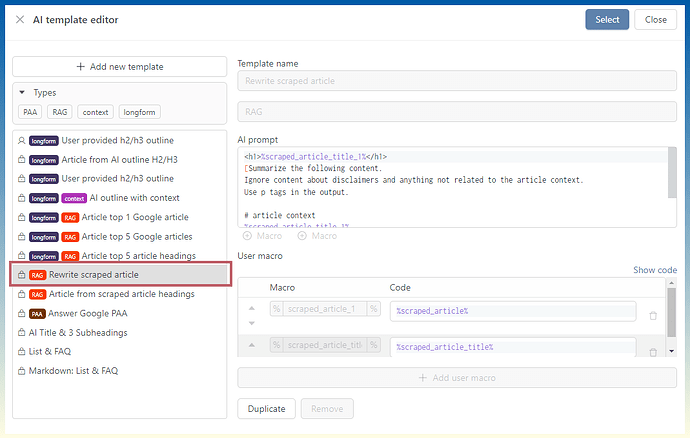

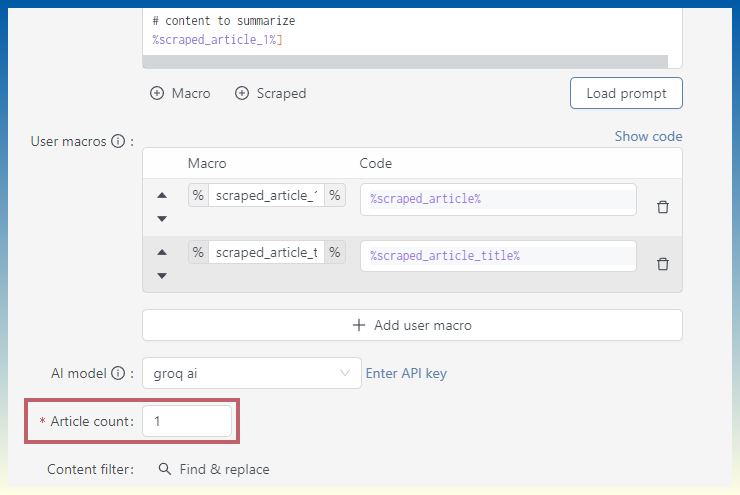

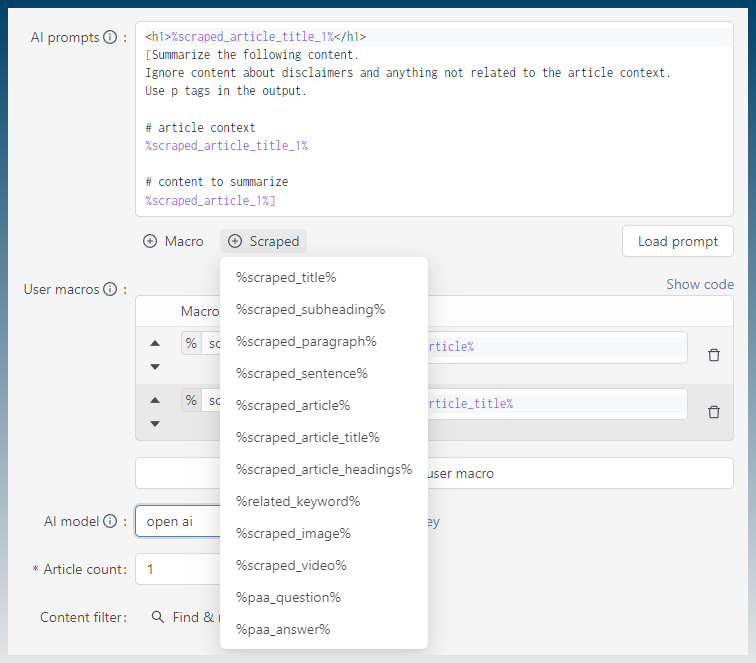
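To make the idea concrete, here is a minimal Python sketch of the prompt-assembly step in RAG. It is not SCM's implementation: the helper name `build_rag_prompt`, the "Article N:" labels, and the sample text are hypothetical. It only shows the core move: retrieved article text is placed after the instructions, so the model answers from that text rather than from memory alone.

```python
def build_rag_prompt(instructions: str, articles: list[str]) -> str:
    """Hypothetical helper: put retrieved article text after the instructions."""
    parts = [instructions.strip()]
    for number, text in enumerate(articles, start=1):
        # Each article starts on its own line, clearly separated from the instructions.
        parts.append(f"Article {number}:\n{text.strip()}")
    return "\n\n".join(parts)


prompt = build_rag_prompt(
    "Write an article about cold brew coffee. Only use facts from the articles below.",
    ["<text of the first top-ranking article>", "<text of the second top-ranking article>"],
)
print(prompt)
```

The retrieval step (searching Google and downloading pages) is left out here; in SCM that part is handled for you.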

With SCM this is easy to do because we have access to two special macros:

- %scraped_article%

- %scraped_article_title%

How to augment AI content generation with scraped content

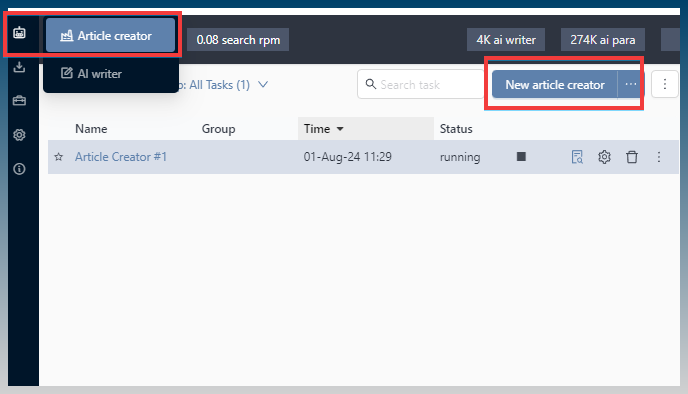

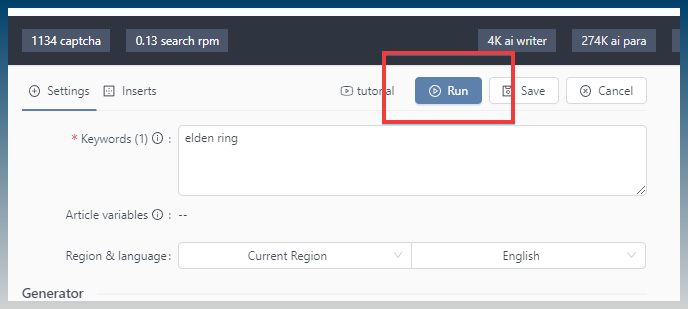

Create a new Article creator task

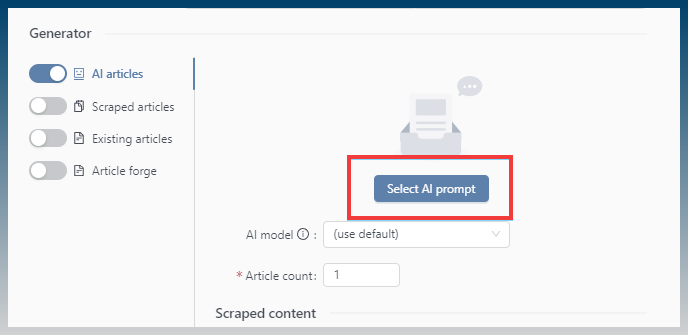

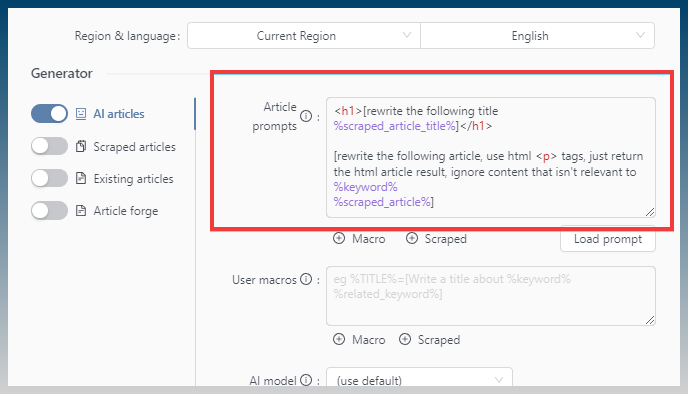

Select AI prompt

Click on the ‘Rewrite scraped article’ template

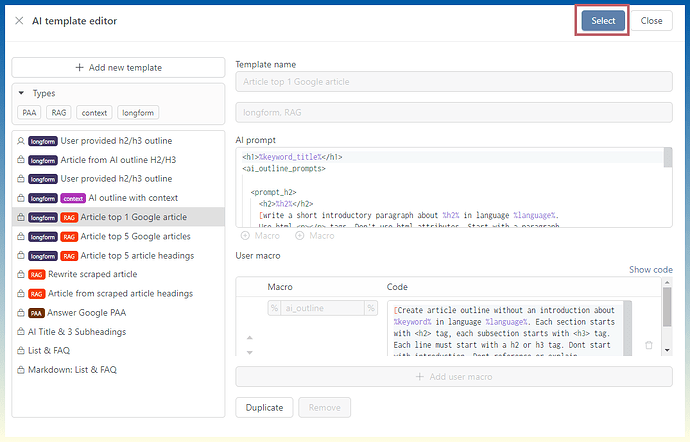

Click ‘Select template’ to load it

Enter the number of articles to create

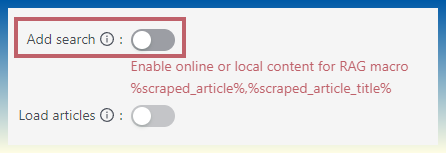

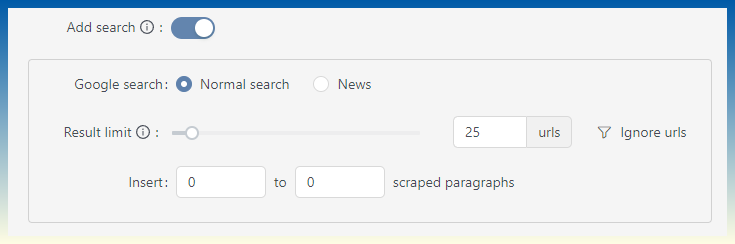

Enable ‘Add search’

Download more results than you need to account for dead and missing sites.
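Why download extra results? Some search results will be unreachable or return no usable text. The sketch below illustrates the idea of working through a longer list until enough good articles are collected. It is a minimal sketch, not how SCM downloads pages: `collect_articles` is a hypothetical helper, and `fetch` is an assumed downloader that returns the page text, or `None` or an empty string for a dead site.

```python
from collections.abc import Callable
from typing import Optional


def collect_articles(urls: list[str], fetch: Callable[[str], Optional[str]], needed: int) -> list[str]:
    """Keep downloading search results until `needed` usable articles are found.

    `fetch` is a hypothetical downloader that returns the page text, or None /
    an empty string when the site is dead or has no content.
    """
    articles: list[str] = []
    for url in urls:
        if len(articles) >= needed:
            break  # we have enough; the spare results are never downloaded
        try:
            text = fetch(url)
        except OSError:
            continue  # timeouts and refused connections count as dead sites
        if text and text.strip():
            articles.append(text)
    return articles


# Five results requested for two articles: "b" is dead and "c" is empty.
pages = {"a": "Article A", "b": None, "c": "", "d": "Article D", "e": "Article E"}
print(collect_articles(list(pages), pages.get, needed=2))
```

If only two results had been downloaded, the dead site "b" would have left us one article short.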

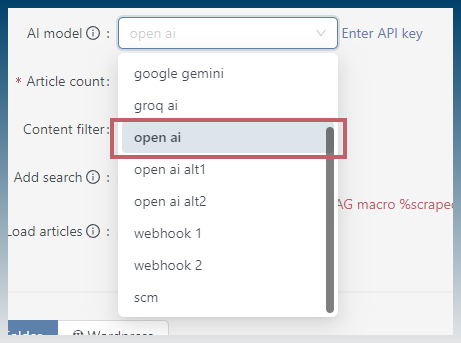

Select an AI model with a large context window, like GPT-4.

The more tokens a model accepts in its prompt, the more scraped content it can take into account in a single request.

As new models are released, context windows are getting larger, so more of the source material fits into the prompt.

This is good for us: we can give the model longer articles to work with.
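To get a feel for how much article text fits, here is a rough Python sketch. It assumes the common rule of thumb of about four characters per token for English text; real counts vary, and OpenAI's `tiktoken` library can count tokens exactly. The helper `fit_to_context` and the window sizes are illustrative assumptions, not SCM settings. Note that the prompt and the model's reply share the same window, which is why some tokens are reserved.

```python
CHARS_PER_TOKEN = 4  # rough average for English text; real token counts vary


def fit_to_context(article: str, context_tokens: int, reserved_tokens: int) -> str:
    """Trim `article` so it roughly fits the model's context window.

    `reserved_tokens` is room kept for the instructions and the model's reply,
    since both share the same window.
    """
    budget_chars = max(context_tokens - reserved_tokens, 0) * CHARS_PER_TOKEN
    if len(article) <= budget_chars:
        return article
    # Cut at the last space inside the budget so no word is split in half.
    cut = article.rfind(" ", 0, budget_chars)
    return article[: cut if cut > 0 else budget_chars]


long_article = "word " * 10_000  # 50,000 characters of sample text
trimmed = fit_to_context(long_article, context_tokens=8_000, reserved_tokens=1_000)
print(len(trimmed))
```

With an 8,000-token window and 1,000 tokens reserved, roughly 28,000 characters of article text fit; a model with a larger window could take the whole sample article.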

Click ‘RUN’

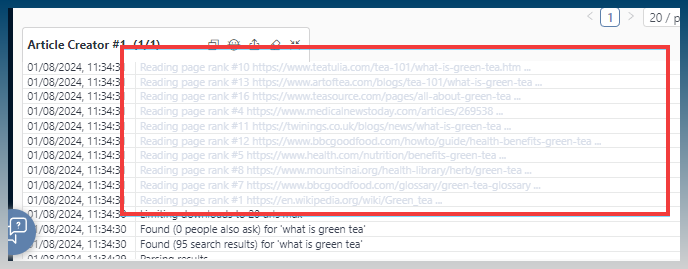

Output

Here we can see which pages are being downloaded from Google.

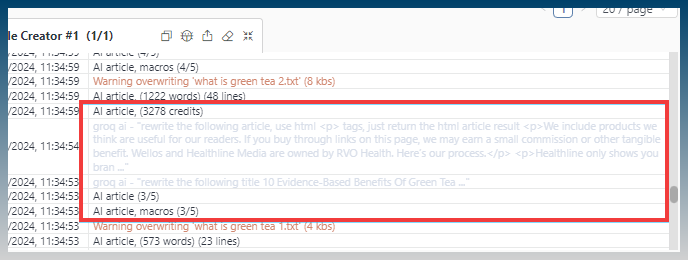

As each article is sent to the AI service, you can see a preview of the content being sent.

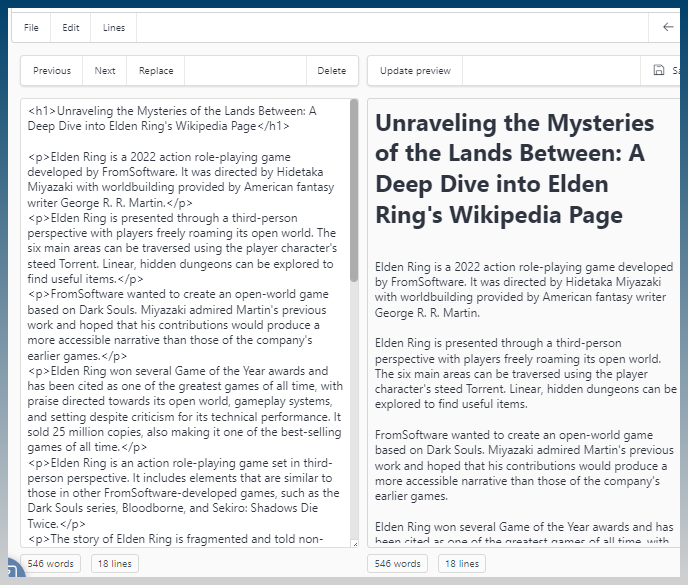

To preview the results, click the blue magnifying glass button.

You can preview both the text and the HTML.

Macros explained

RAG is made possible by the %scraped_article% macro.

You can use it by pasting it into your prompts.

You should put the %scraped_article% macro on a new line to give the AI model a hint about where the instructions end and the content begins.

Where does %scraped_article% content come from?

For simplicity, it is just the contents of the <p> (paragraph) tags on the web page.

This is enough to give the AI model plenty of context.
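SCM's own extraction code isn't shown in this guide, but the idea can be illustrated with a minimal Python sketch using the standard library's `html.parser`. It keeps only the text inside <p> tags and drops headings, menus and other markup. This is a simplification and an assumption about the details: SCM may clean or join the text differently, and some pages put body text outside <p> tags.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the text inside <p> tags and ignore everything else."""

    def __init__(self) -> None:
        super().__init__()
        self.in_paragraph = False
        self.buffer: list[str] = []
        self.paragraphs: list[str] = []

    def finish_paragraph(self) -> None:
        # Collapse runs of whitespace and keep only non-empty paragraphs.
        text = " ".join("".join(self.buffer).split())
        if text:
            self.paragraphs.append(text)
        self.buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            if self.in_paragraph:  # a new <p> implicitly ends an unclosed one
                self.finish_paragraph()
            self.in_paragraph = True

    def handle_endtag(self, tag):
        if tag == "p" and self.in_paragraph:
            self.finish_paragraph()
            self.in_paragraph = False

    def handle_data(self, data):
        if self.in_paragraph:
            self.buffer.append(data)


def extract_paragraphs(html: str) -> str:
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    if parser.in_paragraph:  # page ended inside an unclosed <p>
        parser.finish_paragraph()
    return "\n\n".join(parser.paragraphs)


page = "<h1>Title</h1><nav>Menu</nav><p>First <b>paragraph</b>.</p><p>Second\n  paragraph</p>"
print(extract_paragraphs(page))
```

Inline tags such as <b> inside a paragraph are dropped, but their text is kept.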

How does %scraped_article% work?

Every time SCM sees a %scraped_article% macro, it will load the article content from the next site in its list.

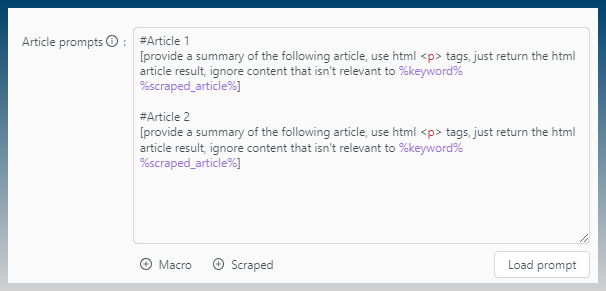

If you use %scraped_article% twice in a prompt, the AI model gets access to two different articles at the same time.
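The "next site in the list" behaviour can be modelled in a few lines of Python. This is a sketch of the behaviour described in this guide, not SCM's actual code: `expand_scraped_articles` is a hypothetical name, and returning an empty string once the articles run out is an assumption. Each occurrence of the macro pulls the next item from a shared iterator, so two occurrences give two different articles.

```python
import re
from collections.abc import Iterator


def expand_scraped_articles(prompt: str, articles: Iterator[str]) -> str:
    """Replace every %scraped_article% with the *next* article from `articles`.

    A model of the behaviour described above, not SCM's actual code.
    Once the articles run out, an empty string is used (an assumption).
    """
    # %scraped_article_title% is not matched: after "scraped_article" it has "_", not "%".
    return re.sub("%scraped_article%", lambda _match: next(articles, ""), prompt)


downloaded = iter(["Text from site 1", "Text from site 2", "Text from site 3"])
prompt = "Compare these two articles:\n%scraped_article%\n\n%scraped_article%"
print(expand_scraped_articles(prompt, downloaded))
```

Because the iterator is shared, the next prompt that uses %scraped_article% would continue with site 3.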

But what if you want to use the same scraped article multiple times?

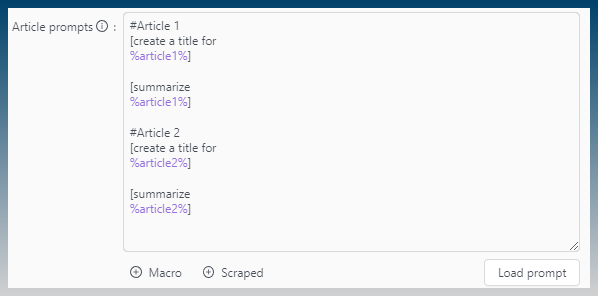

How to refer to a specific %scraped_article%

Each time SCM sees %scraped_article%, it will load a new article into your prompt.

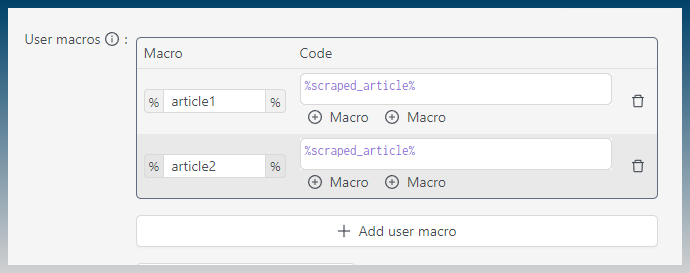

However, you can save the contents of one article to a macro so it can be reused multiple times.

We can pin the output of %scraped_article% by using the User Macros section.

Here we pin the output of %scraped_article% to %article1% and %article2%.

Now we can use %article1% or %article2% as often as we like without advancing to the next article in the list.

For example, %article1% can be used to generate a title, and then again to create a summary.
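Pinning can be sketched the same way: each user macro takes one scraped article exactly once, and every later use of that macro returns the same stored text. The helper names `pin_user_macros` and `apply_user_macros` are hypothetical, and this only models the behaviour described here; SCM's User Macros section does this through its interface, not through code.

```python
import re
from collections.abc import Iterator


def pin_user_macros(names: list[str], articles: Iterator[str]) -> dict[str, str]:
    """Hypothetical: take one scraped article per user macro, exactly once."""
    return {name: next(articles, "") for name in names}


def apply_user_macros(prompt: str, pinned: dict[str, str]) -> str:
    """Replace %name% with its pinned text; other macros are left for later steps."""
    return re.sub(r"%(\w+)%", lambda m: pinned.get(m.group(1), m.group(0)), prompt)


pinned = pin_user_macros(
    ["article1", "article2"],
    iter(["Text from site 1", "Text from site 2", "Text from site 3"]),
)
title_prompt = apply_user_macros("Write a title for this article:\n%article1%", pinned)
summary_prompt = apply_user_macros("Summarise this article:\n%article1%", pinned)
# Both prompts contain "Text from site 1": reusing %article1% did not advance the list.
```

Unknown macros such as %keyword% are passed through unchanged, so other substitutions can still happen afterwards.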

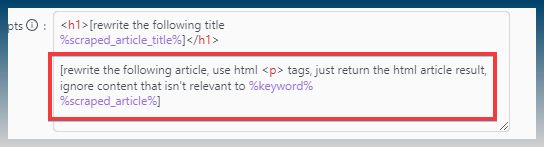

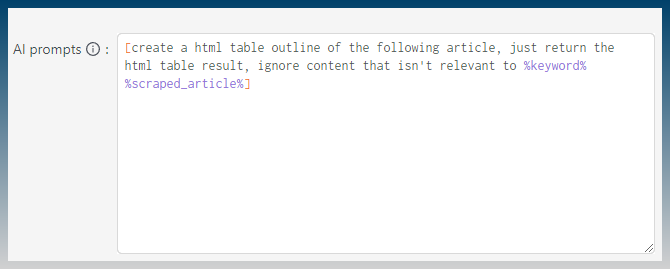

Prompt editing

You can edit the prompt to send additional instructions to the AI model.

For example, to create an HTML table:

[create a html table outline of the following article, just return the html table result, ignore content that isn't relevant to %keyword%

%scraped_article%]

With a prompt like this, the AI model can generate HTML tables.

Summary

- We use RAG to avoid hallucinations, i.e. the AI making up facts that don’t exist.

- We use the %scraped_article% macro in AI prompts to give the model context.

- %scraped_article% is the content from the top articles in Google search.

- Every time SCM sees %scraped_article%, it loads content from the next web page it downloaded from Google.

- We can reuse the same scraped article by using User Macros.