There are a lot of new AI models and services offering generated content via APIs.

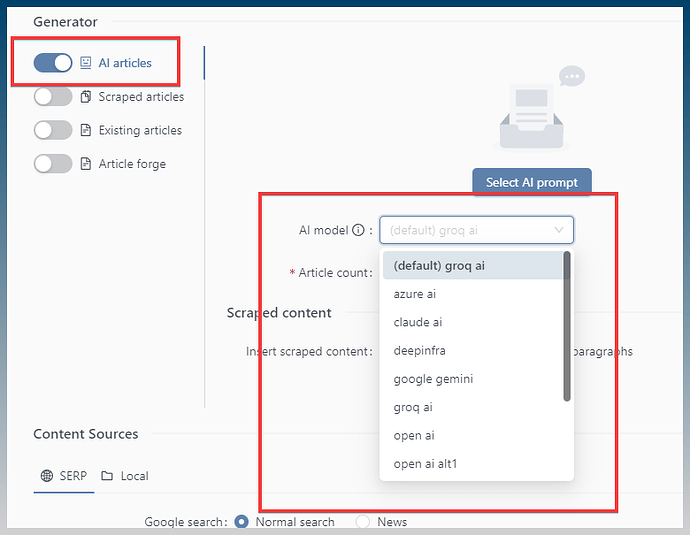

SCM supports popular AI services such as OpenAI, Groq and Gemini.

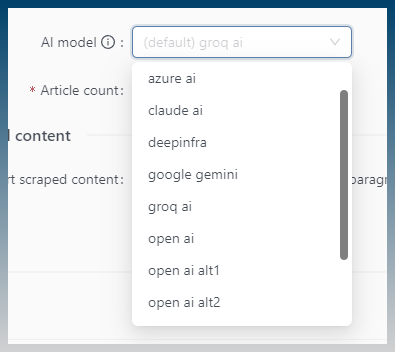

This is the current list of models supported in the app.

What happens if a service is not directly supported by SCM?

You can still use those services right now, in one of two ways.

Solutions:

- Use OpenAI compatible API models

- Use Webhooks inside the Article creator

OpenAI compatible APIs

The first solution is very easy to use.

Most AI services offer an API that emulates the OpenAI API.

We can re-use the OpenAI calling code already present in SCM.

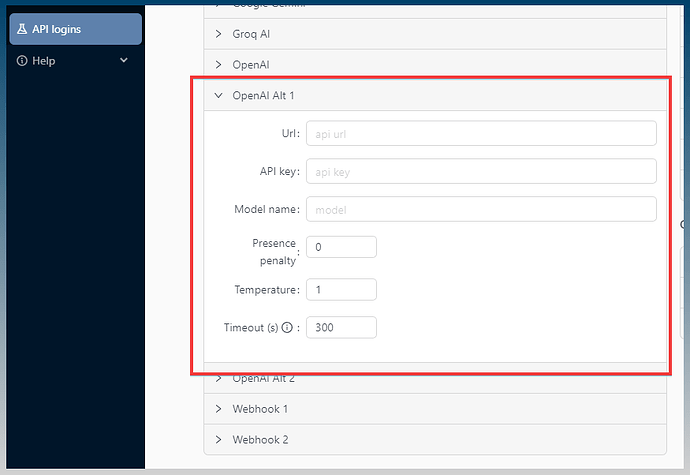

Go to API settings.

Use OpenAI Alt 1 or OpenAI Alt 2.

Provide the API URL and API key of the service.

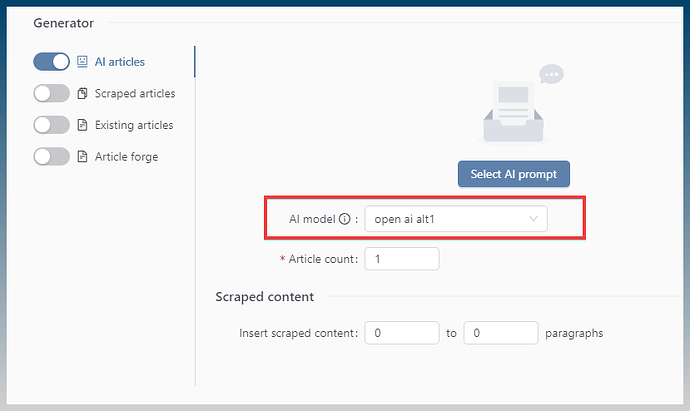

Inside the Article Creator, select your OpenAI Alt as the default service.

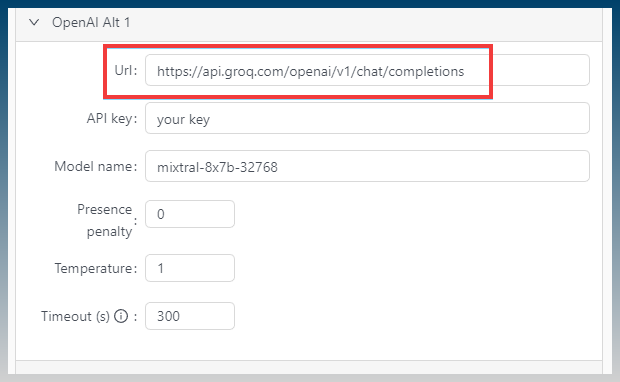

Example

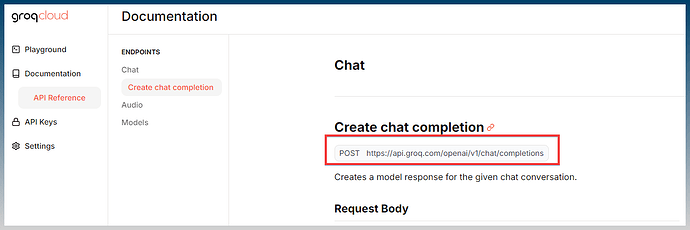

Groq emulates the OpenAI API spec.

The values are taken from the Groq API docs.

https://console.groq.com/docs/api-reference#chat-create

Now we can use unsupported AI models, as long as they emulate the OpenAI API.
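As a rough illustration of what "emulating the OpenAI API" means, the sketch below builds the kind of chat request an OpenAI compatible service accepts. The helper name `buildChatRequest`, the API key and the model name are placeholders made up for this example; the base URL is the one Groq documents for its OpenAI compatible endpoint. Whether SCM expects the base URL or the full endpoint in its API URL field is not covered here.

```javascript
// Build an OpenAI-style chat completion request for any compatible service.
// baseUrl, apiKey and model are placeholders; take real values from your provider's docs.
function buildChatRequest(baseUrl, apiKey, model, prompt) {
  return {
    // Strip trailing slashes so we never end up with "//chat/completions".
    url: baseUrl.replace(/\/+$/, "") + "/chat/completions",
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "Authorization": "Bearer " + apiKey,
    },
    body: JSON.stringify({
      model: model,
      messages: [{ role: "user", content: prompt }],
    }),
  };
}

const request = buildChatRequest(
  "https://api.groq.com/openai/v1", // Groq's OpenAI compatible base URL
  "YOUR_API_KEY",                   // placeholder
  "your-model-name",                // placeholder, see the provider's model list
  "Write a short intro about coffee."
);
console.log(request.url);
```

Only the base URL, key and model change between providers; the request shape stays the same, which is why SCM can reuse its existing OpenAI calling code.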

But what about a service that doesn’t emulate OpenAI?

Using Webhooks to call any service

Add other services via generic Webhooks.

Only JSON Webhooks are supported.

Your service must support JSON calls and must respond with JSON data. Anything else will fail.

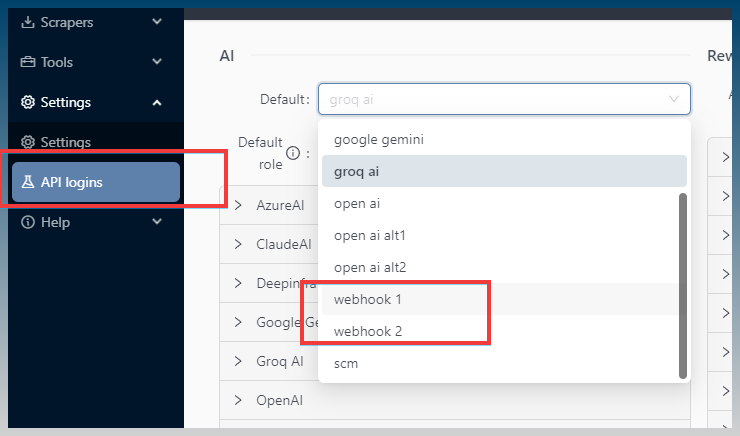

Webhooks are located in API settings.

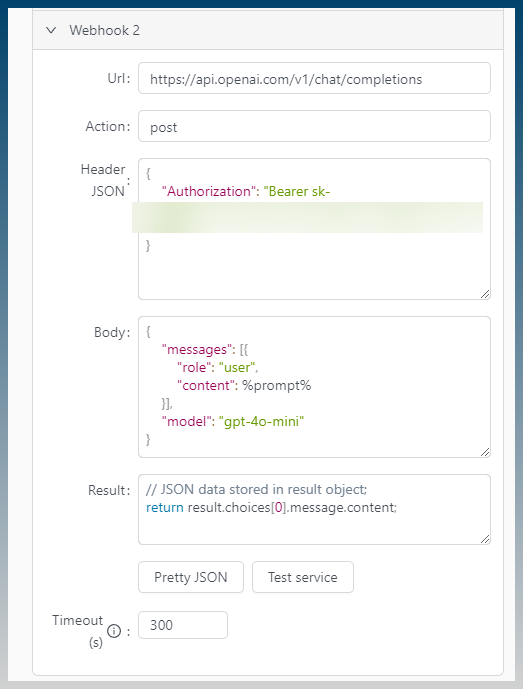

We will use OpenAI as an example.

We have full control of what data we send to the AI service.

Results are parsed back using JavaScript.

Webhooks are an advanced feature and require basic JavaScript programming knowledge.

How to configure Webhooks

1. Enter URL of API service

2. Leave the default action as POST

Change it only if you know the service expects GET or another method.

3. Provide authentication

If your service needs authentication, you might need to provide a Header > Authorization string.

Normally this means providing your API key.

Remember that all values must be entered as a JSON object.

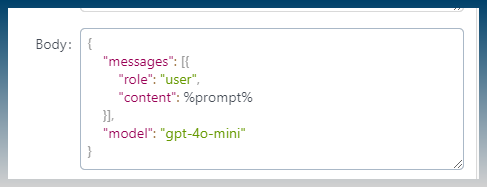
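As a hedged example of what such a header object can look like, the sketch below builds one and prints it as JSON. The `Bearer` scheme is what OpenAI style services commonly expect, but your service may use a different header name or scheme, so treat the names and the key as placeholders.

```javascript
// Hypothetical key; replace it with the key from your AI service.
const apiKey = "YOUR_API_KEY";

// Many OpenAI-style services expect a Bearer token in the Authorization header.
// This is an assumption: check your service's docs for the exact header it needs.
const headers = {
  "Authorization": "Bearer " + apiKey,
  "Content-Type": "application/json",
};

// The JSON text form of the header object, pretty-printed with 2 spaces.
const headersJson = JSON.stringify(headers, null, 2);
console.log(headersJson);
```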

4. Provide JSON body data

This is the data that is passed to the service.

The %prompt% macro is replaced with the AI prompt from SCM.

You can click Pretty JSON to check visually if your inputs look valid.

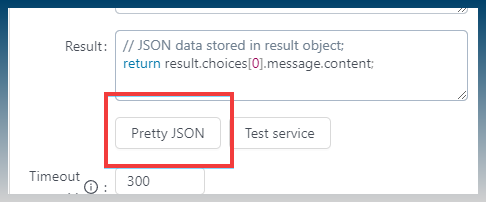

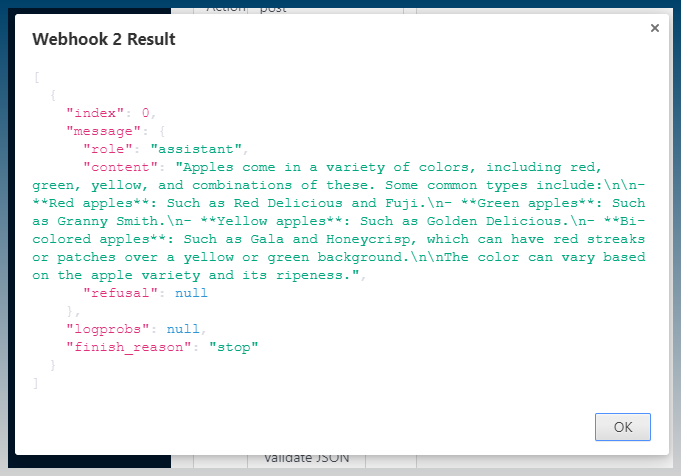

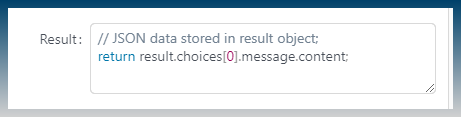
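To make the body and the %prompt% macro more concrete, here is a minimal sketch of an OpenAI style body template and a quick validity check. The model name is only an example, and `fillPrompt` merely simulates the substitution for testing outside SCM; how SCM performs the replacement internally is an assumption.

```javascript
// Body template as it might be typed into the webhook settings.
// "gpt-4o-mini" is only an example model name.
const bodyTemplate = `{
  "model": "gpt-4o-mini",
  "messages": [{ "role": "user", "content": "%prompt%" }]
}`;

// Simulate the macro substitution (assumption: SCM's real substitution may differ).
// JSON.stringify escapes quotes and newlines; slice(1, -1) drops the outer quotes.
function fillPrompt(template, prompt) {
  const escaped = JSON.stringify(prompt).slice(1, -1);
  return template.split("%prompt%").join(escaped);
}

// Returns true when the text parses as JSON.
function isValidJson(text) {
  try {
    JSON.parse(text);
    return true;
  } catch (e) {
    return false;
  }
}

const body = fillPrompt(bodyTemplate, 'Write about "cold brew" coffee.');
console.log(isValidJson(body));
```

A prompt that contains quotes or line breaks is the most likely thing to break a JSON body, which is why the sketch escapes the prompt before inserting it.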

5. Format return data

Webhooks can only return a single string value.

Any value that is returned as a JSON object will automatically be converted to a string.

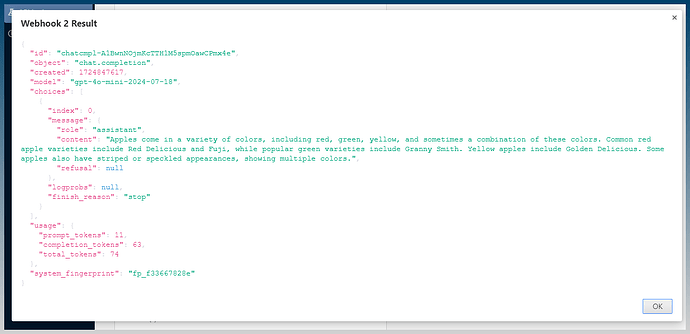

For OpenAI, the actual generated text is buried inside the message object of the result.
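The sketch below shows where that text sits in an OpenAI chat completion response and one way to pull it out. The sample response is trimmed down, `extractContent` is a hypothetical helper, and the way SCM hands the response to your result script is not shown here, so adapt the access path to the script box in the screenshot above.

```javascript
// Trimmed-down example of an OpenAI chat completion response.
const sampleResponse = {
  id: "chatcmpl-example",
  choices: [
    { index: 0, message: { role: "assistant", content: "Coffee is a brewed drink." } },
  ],
};

// Hypothetical helper: return only the generated text, or "" if the shape is unexpected.
function extractContent(response) {
  const choice = response && response.choices && response.choices[0];
  const message = choice && choice.message;
  return message && typeof message.content === "string" ? message.content : "";
}

// Returning the whole message object would leave you with JSON text once it is
// converted to a string (illustrated here with JSON.stringify).
const asObjectString = JSON.stringify(sampleResponse.choices[0].message);

// Returning only the content gives plain text.
const asPlainText = extractContent(sampleResponse);

console.log(asObjectString);
console.log(asPlainText);
```

Guarding against a missing `choices` array means an error response from the service produces an empty string instead of a script error.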

Testing your Webhooks

You can use the Test Service button to verify that you get the result you want.

Continuing the OpenAI example above:

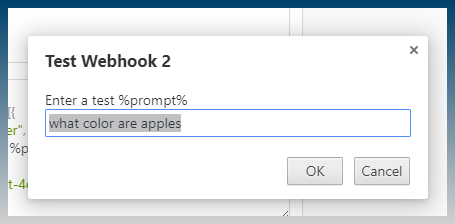

Click Test Service

Provide a prompt

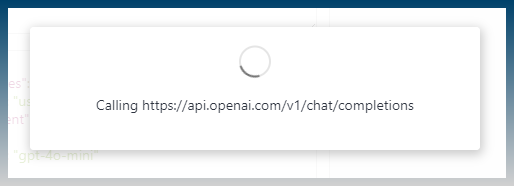

Webhook calls

Immediate result

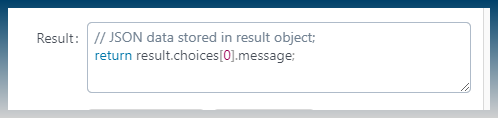

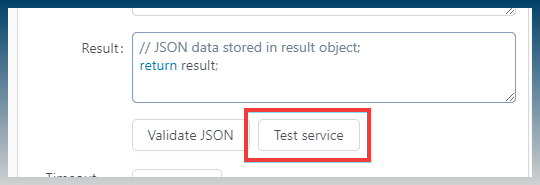

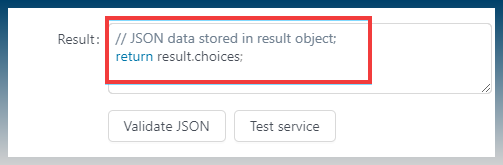

Narrow the results by changing the result script

Test again

Just get the content

Test

This is now a plain text result.

You can tell it's no longer JSON because the color-coded JSON properties are gone.

The webhook is ready to be used.

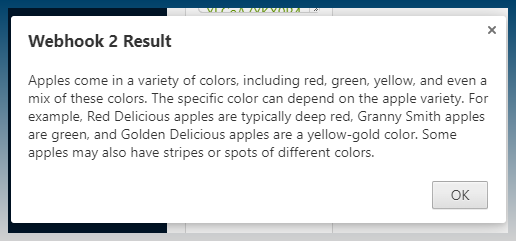

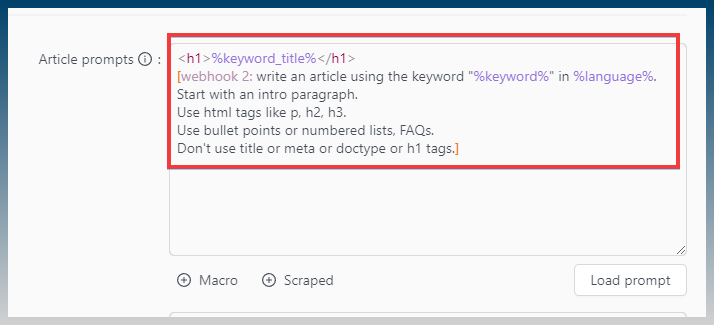

How to call the webhook

- Set it as the AI default

- Set it as the Article Creator task default

- Call it inside a prompt

The name is the same as in the dropdown, so ‘webhook 1’ or ‘webhook 2’.