1. The problem

The Google scraper and individual websites have different proxy needs. The Google scraper needs a large number of proxies (100+) to work reliably, because Google bans an IP address very quickly. Fortunately, datacenter proxies are fine for this, but you need a lot of them so you can rotate on each request. Luckily, they are cheap and easy to find in bulk.

For individual websites, you only need a few ISP/residential proxies. Datacenter proxies no longer work on many individual websites because of the recent Cloudflare bot protections that are easy to add to WordPress. So lately, many requests to websites from datacenter proxies are “blocked”, and as a result the Google scraper cannot get the logo, email, socials, etc. Unfortunately, ISP/residential proxies are expensive and hard to come by, but you don't need many, since you are visiting different websites. You only need a few to get past Cloudflare’s flood detection.

2. Simple solution

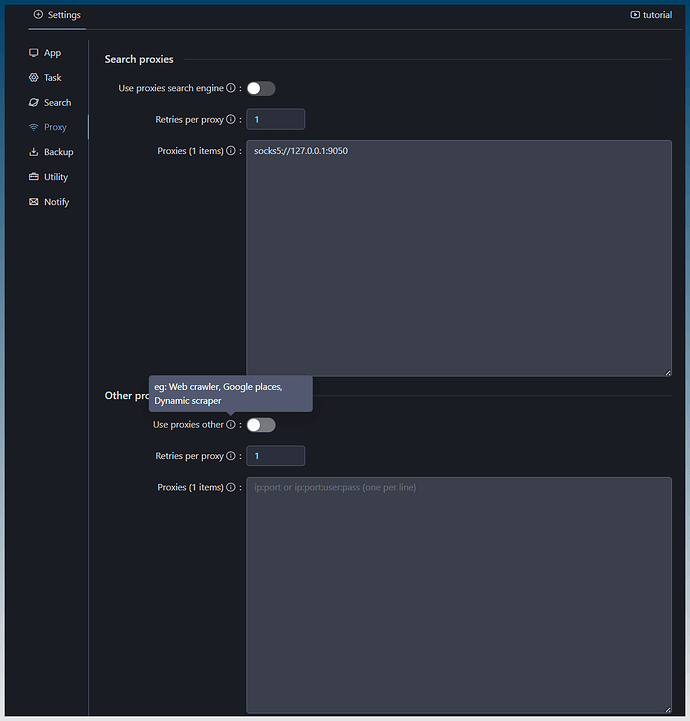

Create proxy pools (or proxy tags, in the format IP:PORT:USER:PASS:TAG, with the tag optional) and allow pools/tags to be assigned to “SERPs” vs “Individual websites”.
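To show roughly what I mean by the tagged format, here is a minimal Python sketch that reads IP:PORT:USER:PASS:TAG lines and groups them into pools by tag. The names `Proxy`, `parse_proxy_line`, `build_pools` and the `"untagged"` pool are made up for illustration, not anything from the existing software, and it assumes the password contains no `:` character.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class Proxy(NamedTuple):
    host: str
    port: int
    user: str
    password: str
    tag: Optional[str]  # None when the line has no tag


def parse_proxy_line(line: str) -> Proxy:
    """Parse one IP:PORT:USER:PASS[:TAG] line (assumes no ':' in the password)."""
    parts = line.strip().split(":")
    if len(parts) not in (4, 5):
        raise ValueError(f"expected IP:PORT:USER:PASS[:TAG], got {line!r}")
    host, port, user, password = parts[:4]
    tag = parts[4] if len(parts) == 5 and parts[4] else None
    return Proxy(host, int(port), user, password, tag)


def build_pools(lines):
    """Group proxies by tag; proxies without a tag go into an 'untagged' pool."""
    pools = defaultdict(list)
    for line in lines:
        if not line.strip():
            continue  # skip blank lines in the pasted list
        proxy = parse_proxy_line(line)
        pools[proxy.tag or "untagged"].append(proxy)
    return dict(pools)
```

With something like this, a pasted list of mixed datacenter and residential proxies ends up as separate pools that can each be assigned to a target.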

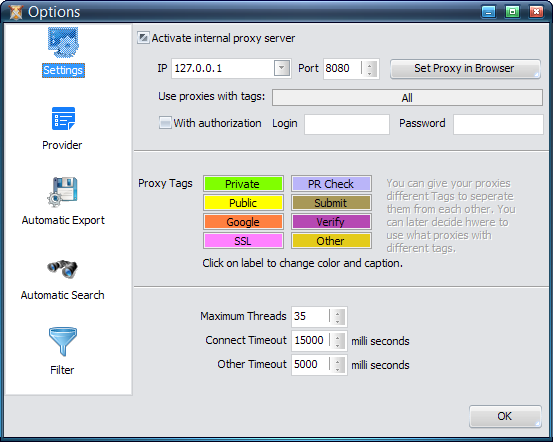

For example, the GSA proxy module (built into just about every GSA software product) allows tagging, so you can assign proxy tags to different targets for this very purpose. (This is where I got the idea from.)

But the point is, it works, and it works well.

If you were to add a similar feature, we could easily (and cheaply) work around most bans. If you want to make it easy on yourself to program, just add an “Allowed Proxy Tags” setting for each of Google, Bing, and Websites.
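A rough sketch of how that setting could work, in Python. Everything here is hypothetical (the `ALLOWED_PROXY_TAGS` mapping, the tag names, and the `TaggedRotator` class); it only shows the idea of filtering proxies by the tags allowed for a target and then rotating through the ones that match.

```python
import itertools

# Hypothetical per-target setting: which proxy tags each target may use.
ALLOWED_PROXY_TAGS = {
    "google": {"datacenter"},
    "bing": {"datacenter"},
    "websites": {"isp", "residential"},
}


class TaggedRotator:
    """Round-robin over the proxies whose tag is allowed for a given target."""

    def __init__(self, proxies, allowed_tags):
        # proxies: list of (address, tag) pairs
        self._cycles = {}
        for target, tags in allowed_tags.items():
            eligible = [addr for addr, tag in proxies if tag in tags]
            self._cycles[target] = itertools.cycle(eligible) if eligible else None

    def next_proxy(self, target):
        cycle = self._cycles.get(target)
        if cycle is None:
            raise LookupError(f"no proxies allowed for target {target!r}")
        return next(cycle)
```

Usage would be something like `rotator.next_proxy("google")` before each SERP request and `rotator.next_proxy("websites")` before each website visit, so the cheap datacenter proxies never get used against Cloudflare-protected sites.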