1. The problem

Hi SCM team,

I’m consistently running into problems with the Dynamic Page Scraper. It works fine on simple, lightweight HTML pages, but it fails on many modern, heavily protected websites.

Here are some concrete examples:

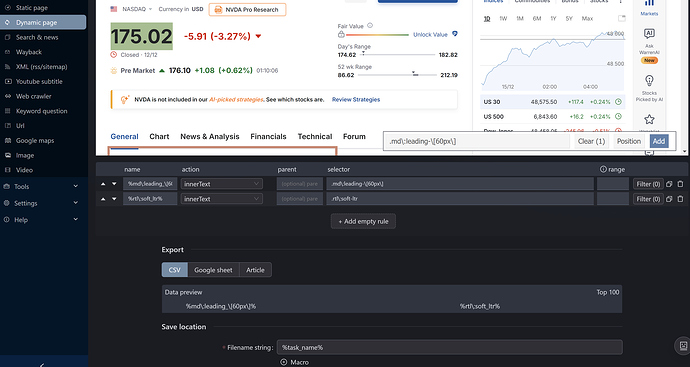

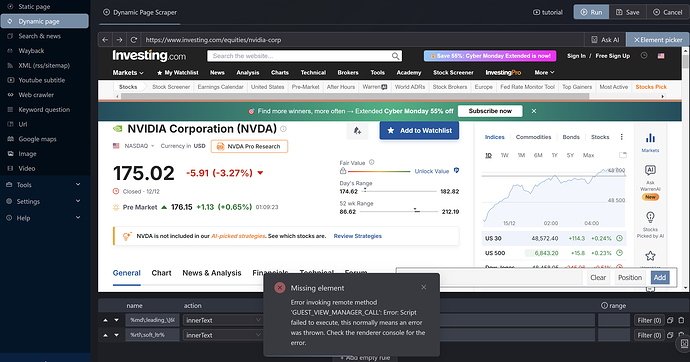

- Page loads visually

- Adding selectors throws errors

- Selectors never capture the actual text (values come back empty or incorrect)

- Returns a 403 (Forbidden) error

- The site is likely blocking bots and automation tools

- Yahoo Finance: https://finance.yahoo.com/quote/NVDA/

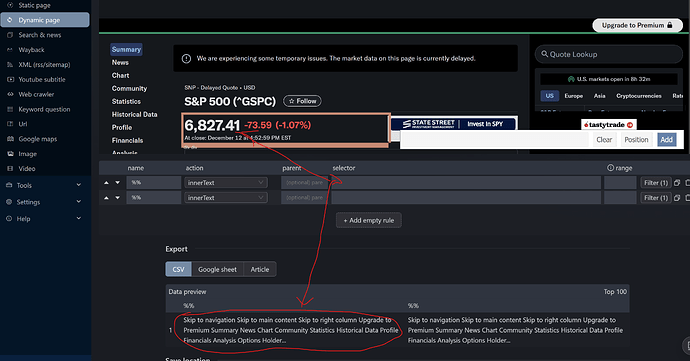

- Page loads, but the element picker cannot capture any real content

- Every selected element produces an empty selector with no actual text

There are more websites I’d like to scrape, and many of them fail in similar ways.

I understand this is challenging: these sites rely on heavy JavaScript rendering and use strong protections such as bot detection and automation blocking. Still, it would be very helpful if SCM could support:

- Custom User-Agent configuration

- Additional browser fingerprint options

- Better handling of JS-rendered content

- Anti-bot mitigation options
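To illustrate what I mean by custom User-Agent configuration: outside SCM, the User-Agent is just an HTTP request header. The sketch below uses Python's standard-library `urllib.request` (not anything SCM exposes; I'm not assuming how SCM would configure this) to build a request that sends a browser-like User-Agent instead of urllib's default `Python-urllib/3.x`. The UA string is only an example, and the sketch does not send anything. Changing the User-Agent alone is unlikely to get past strong bot detection, which is why I also listed fingerprint and anti-bot options.

```python
import urllib.request

# URL from the examples above; the request is only built here, never sent.
URL = "https://finance.yahoo.com/quote/NVDA/"

# Example desktop-browser User-Agent string (an assumption, not an SCM default).
CUSTOM_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36"
)


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Build (but do not send) a GET request carrying a custom User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


req = build_request(URL, CUSTOM_UA)
# urllib normalizes header names with str.capitalize(), so the stored key is "User-agent".
print(req.get_header("User-agent"))
```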

Some people are experimenting with open-source AI browser agents for scraping, which may offer ideas or inspiration:

- https://browser-use.com/

- GitHub repository browser-use/browser-use: "Make websites accessible for AI agents. Automate tasks online with ease."

I’m not suggesting copying these tools directly, but they may offer useful concepts for making dynamic scraping more reliable.

I’d also appreciate any guidance on how to get the Dynamic Page Scraper working on the websites mentioned above.

Thank you