Is it possible to integrate the ScrapeOwl proxy into SCM for Google Maps and other scraping?

ScrapeOwl is a scraping API, and it is very cheap compared to residential proxies, which cost a lot more.

Could you please check whether it can be integrated into SCM?

Got it, thanks. There was a similar request to use Serp API as well.

ScrapeOwl is much cheaper and provides more options.

Features of Serp API:

- Search engine results from Google, Bing, Yahoo, and Yandex

- Supports various search types (web, images, news, shopping, etc.)

- Provides location-based search results

- Offers search analytics and SERP features data

- Supports multiple languages and countries

- Provides API libraries for various programming languages

Features of ScrapeOwl API:

- Web scraping service for extracting data from websites

- Supports scraping of various types of data (text, images, links, etc.)

- Handles JavaScript rendering and AJAX content

- Offers proxy rotation and IP geotargeting

- Provides API libraries for various programming languages

- Offers a browser extension for easy data extraction

Comparison between Serp API and ScrapeOwl API:

- Serp API focuses on providing search engine results, while ScrapeOwl API specializes in web scraping and data extraction from websites.

- Serp API offers search results from multiple search engines, while ScrapeOwl API can scrape data from any website.

- Serp API provides location-based search results and search analytics, while ScrapeOwl API offers proxy rotation and IP geotargeting for web scraping.

- Both services offer API libraries for various programming languages, making integration easier.

- Serp API is suitable for applications that require search engine data, while ScrapeOwl API is ideal for extracting specific data from websites.

- Serp API has a focus on search-related features, while ScrapeOwl API specializes in handling complex web scraping scenarios, such as JavaScript rendering and AJAX content.

Serp API pricing:

- Basic Plan: $50/month for 5,000 searches

- Business Plan: $100/month for 12,000 searches

- Business Pro Plan: $200/month for 30,000 searches

- Enterprise Plan: Custom pricing for high-volume needs

- Pay-as-you-go: $0.012 per search

- Free trial: 100 searches

ScrapeOwl API pricing:

- Hobby Plan: $29/month for 200,000 API credits

- Startup Plan: $99/month for 1,000,000 API credits

- Business Plan: $249/month for 3,000,000 API credits

- Enterprise Plan: Custom pricing for high-volume needs

- Pay-as-you-go: Starts at $0.0035 per API credit

- Free trial: 1,000 API credits
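To put the quoted plan prices on a common footing, here is a short sketch that divides each monthly price by its monthly allowance. The figures are copied from the lists above and not checked against current pricing; the dictionary and function names are illustrative only. Keep in mind that a Serp API unit is one search while a ScrapeOwl unit is one API credit, so the two sets of numbers are not directly comparable.

```python
# (USD per month, units per month) as quoted in this thread.
# A Serp API unit is one search; a ScrapeOwl unit is one API credit.
SERP_API_PLANS = {
    "Basic": (50, 5_000),
    "Business": (100, 12_000),
    "Business Pro": (200, 30_000),
}
SCRAPEOWL_PLANS = {
    "Hobby": (29, 200_000),
    "Startup": (99, 1_000_000),
    "Business": (249, 3_000_000),
}


def usd_per_unit(plans: dict[str, tuple[float, int]]) -> dict[str, float]:
    """Monthly price divided by monthly allowance for each plan."""
    return {name: price / units for name, (price, units) in plans.items()}


if __name__ == "__main__":
    for label, plans, unit in (
        ("Serp API", SERP_API_PLANS, "search"),
        ("ScrapeOwl", SCRAPEOWL_PLANS, "credit"),
    ):
        for name, cost in usd_per_unit(plans).items():
            print(f"{label} {name}: ${cost:.6f} per {unit}")
```

With these figures the per-unit price drops as the plan gets bigger for both services; ScrapeOwl's lowest rate is the Business plan at about $0.000083 per credit, and Serp API's is Business Pro at about $0.0067 per search.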

Pricing comparison:

- Serp API’s pricing is based on the number of searches, while ScrapeOwl API’s pricing is based on API credits.

- ScrapeOwl API offers more affordable entry-level pricing with its Hobby Plan at $29/month, compared to Serp API’s Basic Plan at $50/month.

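- Serp API’s pay-as-you-go rate is $0.012 per search, while ScrapeOwl API’s pay-as-you-go rate starts at $0.0035 per API credit. Because one ScrapeOwl request can consume more than one credit depending on its options, these two rates are not directly comparable.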

- ScrapeOwl API’s monthly plans include far more API credits than Serp API’s plans include searches, although how many requests those credits cover depends on how many credits each request consumes.

- Both services offer a free trial, with Serp API providing 100 searches and ScrapeOwl API offering 1,000 API credits.

- Serp API’s pricing is more suitable for applications that require a lower number of searches, while ScrapeOwl API’s pricing is more cost-effective for applications that need a higher volume of web scraping requests.
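The per-search vs per-credit comparison only becomes like-for-like once you know how many credits a ScrapeOwl request uses. A minimal sketch, assuming the rates quoted above and treating credits-per-request as a hypothetical input (the real value depends on options such as JavaScript rendering or premium proxies), that finds the break-even point:

```python
import math
from fractions import Fraction

# Pay-as-you-go rates as quoted in this thread. Exact fractions avoid
# float rounding right at the break-even boundary.
SERP_PAYG_PER_SEARCH = Fraction("0.012")
SCRAPEOWL_PAYG_PER_CREDIT = Fraction("0.0035")


def cost_per_request(usd_per_credit: Fraction, credits_per_request: int) -> Fraction:
    """USD for one ScrapeOwl request that consumes `credits_per_request` credits."""
    return usd_per_credit * credits_per_request


def breakeven_credits(usd_per_search: Fraction, usd_per_credit: Fraction) -> int:
    """Largest whole number of credits one ScrapeOwl request can consume
    while still costing no more than one Serp API search."""
    return math.floor(usd_per_search / usd_per_credit)


if __name__ == "__main__":
    # Hypothetical credit costs per request, for illustration only.
    for credits in (1, 5, 10):
        cost = cost_per_request(SCRAPEOWL_PAYG_PER_CREDIT, credits)
        print(f"{credits:>2} credit(s)/request: ${float(cost):.4f} "
              f"vs ${float(SERP_PAYG_PER_SEARCH):.4f} per search")
    print("break-even:",
          breakeven_credits(SERP_PAYG_PER_SEARCH, SCRAPEOWL_PAYG_PER_CREDIT),
          "credits/request")
```

With the quoted pay-as-you-go rates the break-even is 3 credits per request, so any request costing more than that is pricier than a Serp API search. Using the Hobby and Basic plan rates instead (`Fraction(29, 200_000)` per credit and `Fraction(50, 5_000)` per search), the break-even rises to 68 credits per request.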

Also, anyone using Zimmwriter can get ScrapeOwl's entry package for just $5, with 10k API credits including premium proxy credits.

Hmm, interesting, thanks for the detailed breakdown.

Do you have a ScrapeOwl API key? If you can share it, it will help with the implementation.

But since you can do all the scraping with ScrapeOwl directly, where do you want SCM to work with it?

ScrapeOwl offers a free trial with 1,000 API credits, no credit card required:

https://app.scrapeowl.com/register

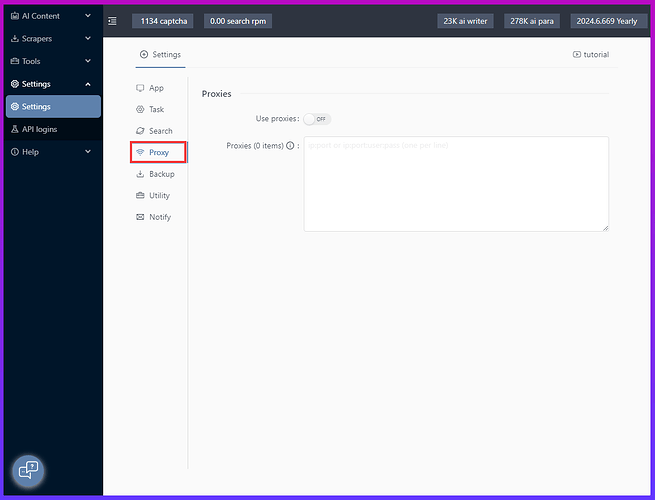

Ideally it would be available in every scraper function in SCM, with an option to switch it on or off for anyone who wants to scrape without it.
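One way the on/off switch could work is to route every scraper's page fetch through a single shared helper. A minimal sketch, assuming SCM has such a helper: `FetchSettings` and `build_request` are hypothetical names, and the endpoint `https://api.scrapeowl.com/v1/scrape` plus the `api_key`, `url`, and `render_js` fields are my reading of ScrapeOwl's public docs, so verify them before relying on this. The request is only built here, not sent.

```python
import json
import urllib.request
from dataclasses import dataclass

# ASSUMPTION: endpoint and field names as I understand ScrapeOwl's docs.
SCRAPEOWL_ENDPOINT = "https://api.scrapeowl.com/v1/scrape"


@dataclass
class FetchSettings:
    """Hypothetical user settings SCM could expose as an on/off switch."""
    use_scrapeowl: bool = False
    api_key: str = ""
    render_js: bool = False


def build_request(url: str, settings: FetchSettings) -> urllib.request.Request:
    """Return the HTTP request a scraper would send for `url`.

    Switch off: request the target site directly (SCM's existing path).
    Switch on: wrap the target URL in a POST to the ScrapeOwl API.
    """
    if not settings.use_scrapeowl:
        return urllib.request.Request(url, method="GET")
    if not settings.api_key:
        raise ValueError("ScrapeOwl is enabled but no API key is set")
    payload = {
        "api_key": settings.api_key,
        "url": url,
        "render_js": settings.render_js,
    }
    return urllib.request.Request(
        SCRAPEOWL_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Each scraper would then call `urllib.request.urlopen(build_request(url, settings))` instead of building its own request, so flipping `use_scrapeowl` changes all of them at once, while anyone who leaves it off keeps the current direct behaviour.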

I hope this feature request will be considered. It would reduce monthly proxy expenses and ease the data volume limits.

Thanks

I'm currently writing a bunch of tutorials and improving the workflow and UI.

But I will get around to this.