I’d like to scrape posts on X that contain certain keywords on a weekly basis. Is there a way to store cookies?

For example, the following functionality.

The dynamic scraper browser window does keep cookies.

Do you need to set cookies manually?

Anything you do in the dynamic scraper window (e.g. logging in) is kept, so you will have the same login status when the dynamic scraper is re-run.

I rarely store cookies in my browser for privacy reasons.

In particular, I do not use X in my main browser because I have multiple accounts, and I use multi-profile products such as the following:

https://wavebox.io/

So for users like me it would be more convenient to supply a separate set of cookies (or none at all) for each scraping job.

Does the browser within SCM share its login state with the system's default browser?

Also, does it automatically generate a different browser profile for each scraping workflow?

For example, suppose you have multiple X accounts and already have a workflow that scrapes X while logged in as account A. If you create a second, separate X workflow, will it automatically be logged in as account A? Or is it treated as a new profile, like a guest profile?

I should have tried it myself before commenting.

I tried it, and it seems the default browser's login information is not used.

Also, a newly created X scraping workflow picked up the login of the X account used in the previously created workflow.

As it stands, I don’t think this can handle logging in with a different X account, or having multiple accounts on any site, not just X. I would love to see an option to make the default a private browser and a way to add cookies.

If there is a way to address this at this time, please let me know.

+1 for adding cookies manually; it saves time on OTP-based logins.

Yes, as you discovered, the browser in SCM does not share cookies with the system browser.

It's possible to:

- Give each browser window in SCM its own cookie pool, i.e. a partition

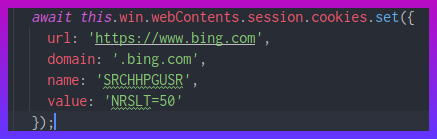

- Allow you to set cookies using JSON
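As a rough illustration of the JSON option, a pasted cookie list might look something like the one below. This is only a sketch: the field names follow the layout that cookie-export browser extensions commonly produce, and the cookie names and values are placeholders. The thread does not state which fields SCM actually accepts.

```python
import json

# Hypothetical cookie JSON, shaped like the output of a typical
# cookie-export extension. Names and values are placeholders only.
pasted = """
[
  {"name": "auth_token", "value": "PLACEHOLDER_A", "domain": ".x.com",
   "path": "/", "secure": true, "httpOnly": true},
  {"name": "ct0", "value": "PLACEHOLDER_B", "domain": ".x.com",
   "path": "/", "secure": true, "httpOnly": false}
]
"""

# json.loads turns the pasted string into a list of dicts, one per cookie.
cookies = json.loads(pasted)
names = [cookie["name"] for cookie in cookies]
print(names)
```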

Do you want to use it to set different X logins per run?

Give me an example of what you want to achieve and the cookies you want to set, and I can find a way to build it into the dynamic scraper.

It is per workflow rather than per run.

I would simply like a fresh set of cookies to be automatically assigned to each dynamic scraper workflow.

In other words, it would be like Chrome starting with a new profile each time.

Does this make sense?

That makes sense.

I can add a cookie box that sets the cookies on each run.

Do you have an example of a cookie you want to set?

Zerowork, an automation product, recommends the use of the following extensions

They appear to be safe for use.

Adding cookies manually usually means going into incognito mode, right?

I can’t add extensions to the Chrome browser in SCM.

Instead, you need to give me a JSON object to set cookies.

It's not incognito, but we can set a unique profile for each run that keeps cookies separate.

With profiles, cookies can either persist indefinitely or be cleared automatically when the SCM app is closed.

It's like having multiple incognito browsers, each with its own cookie storage.

If you give me an example of the cookies you want to set, I can figure out what UI we need.
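The profile idea described above can be sketched roughly as follows. This is a toy model for illustration, not SCM's implementation: `Profile`, `ProfileStore`, and `close_app` are hypothetical names, and real browser profiles store far more than a name-to-value map.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    """One isolated cookie store (hypothetical model of a profile)."""
    persistent: bool
    cookies: dict = field(default_factory=dict)


class ProfileStore:
    """Hands out one profile per workflow so logins never mix."""

    def __init__(self):
        self._profiles = {}

    def get(self, workflow_id, persistent=True):
        # Create the profile on first use, then always return the same one.
        if workflow_id not in self._profiles:
            self._profiles[workflow_id] = Profile(persistent=persistent)
        return self._profiles[workflow_id]

    def close_app(self):
        # Non-persistent profiles lose their cookies when the app closes.
        for profile in self._profiles.values():
            if not profile.persistent:
                profile.cookies.clear()


store = ProfileStore()
account_a = store.get("x-workflow-account-a", persistent=True)
account_b = store.get("x-workflow-account-b", persistent=False)
account_a.cookies["auth_token"] = "PLACEHOLDER_A"
account_b.cookies["auth_token"] = "PLACEHOLDER_B"

store.close_app()
```

After `close_app()`, the persistent profile still holds its cookie while the non-persistent one is empty, which mirrors the "persist forever or clear on close" choice.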

I think it would be fine if the cookie is retrieved by the extension in a separate browser that we use.

In SCM, we only need to be able to save cookies.

Yep, correct. I just need you to give me a sample of the cookies.

Say you use the above tool: what is its output?

That way we can do a quick copy and paste from the cookie tool into SCM.

Is there any privacy issue for me if I give you the value of the cookie?

I sent sample cookies via chat.

Let me add the cookie box…

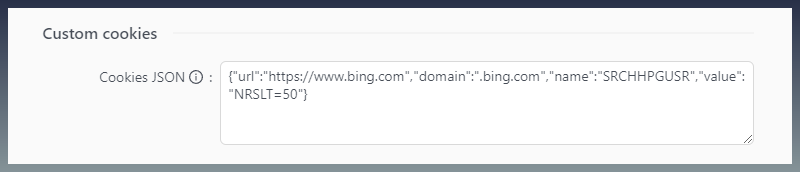

Added a box in the dynamic scraper:

You can paste in a JSON string of the cookies to set.

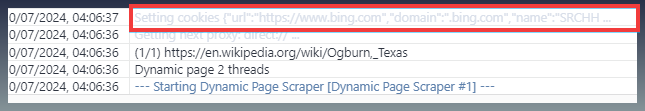

When running, there is a log entry as the cookies are parsed.
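For readers curious what "parse and log" could look like, here is a minimal sketch. It assumes each cookie is a JSON object with at least `name` and `value`; the function name `parse_cookie_json` and the log messages are made up for illustration and are not SCM's actual code or log output.

```python
import json
import logging

logger = logging.getLogger("cookie_box")  # hypothetical logger name


def parse_cookie_json(text):
    """Parse a pasted JSON string into a list of cookie dicts."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        logger.error("Cookie JSON could not be parsed: %s", exc)
        return []

    # Accept either a single cookie object or a list of them.
    if isinstance(data, dict):
        data = [data]
    if not isinstance(data, list):
        logger.error("Expected a JSON object or array of cookies")
        return []

    cookies = []
    for index, item in enumerate(data):
        if not isinstance(item, dict) or "name" not in item or "value" not in item:
            logger.warning("Skipping entry %d: missing name or value", index)
            continue
        cookies.append(item)

    # Log names only, so cookie values never end up in the log.
    logger.info("Parsed %d cookie(s): %s", len(cookies), [c["name"] for c in cookies])
    return cookies
```

Invalid input yields an empty list plus an error log rather than an exception, so a bad paste would not abort the run in this sketch.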

Excellent! Thanks!