For some reason I never get over 120-130 results for any queries even if I set it at 999. Any idea on how to get more results? Should I use proxies?

Thanks

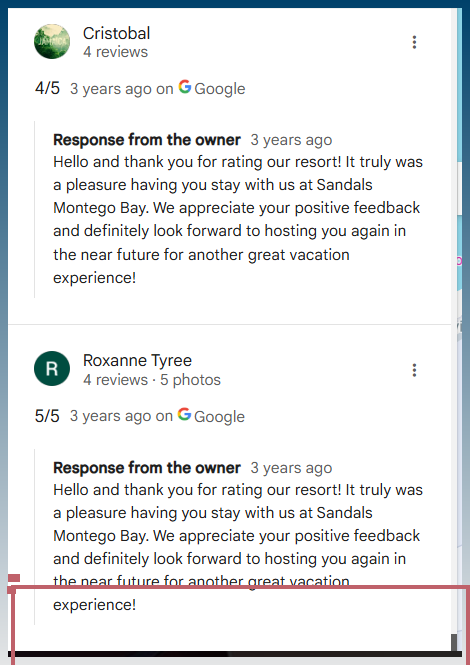

Having a look now. So far the scrolling code for reviews isn’t consistent.

On closer inspection, the review scroll code wasn’t working correctly for hotels, e.g.:

https://www.google.com/maps/place/Sandals+Montego+Bay/@18.509117,-77.9042467,17z/data=!4m11!3m10!1s0x8eda2a2030936acb:0x616676dd47c8ee16!5m2!4m1!1i2!8m2!3d18.509117!4d-77.9042467!9m1!1b1!16s%2Fg%2F1tnjkmmv?hl=en&entry=ttu&g_ep=EgoyMDI1MDMyMy4wIKXMDSoASAFQAw%3D%3D

This has been fixed in the next update.

The maximum number of reviews Google Maps seems willing to load is around 300.

Not sure if this is because the other reviews are in other languages or because that is the max.

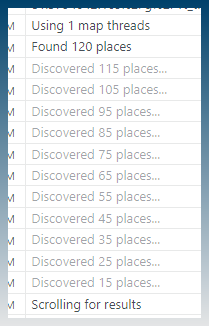

You can see in the screenshot that no more reviews are being loaded.

Oops sorry, I meant limited results, not reviews.

The number of results if I type, for example, ‘restaurant in Montreal’ or ‘lawyers in Toronto’ never goes over 120-130, although there are clearly more listed.

Understood. Let me have another look.

So you want more place results.

That’s correct, thanks.

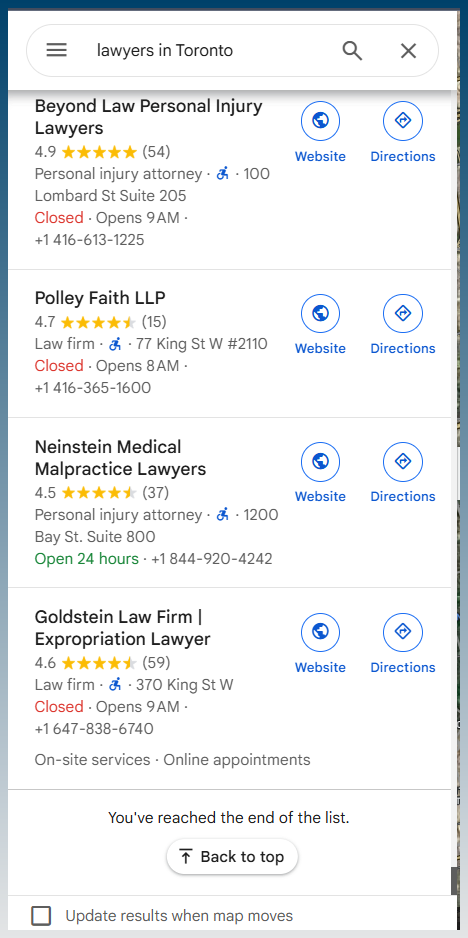

I had a closer look at the Google Maps site. Although the result list appears to be an infinite scroll, it actually stops after ~120 results.

I scrolled until I saw the ‘back to top’

Then I counted how many results

Around 120.

So this is a Google maps limitation.

The workaround is to use more granular search.

Instead of just ‘lawyers in Toronto’ you might need to give it extra keyword searches like ‘attorney in toronto’ etc.

Or if it’s a country-wide search, break the keywords into cities: ‘lawyer in toronto’, ‘lawyer in quebec’ etc.

SCM keeps a list of places it has visited, so any duplicates should be ignored when adding multiple searches.
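To illustrate why overlapping searches are safe to combine, here is a minimal sketch of that kind of de-duplication. It is not SCM's actual code: the `merge_results` helper and the `place_id` field are assumptions made up for the example, standing in for whatever unique key the scraper really tracks.

```python
from typing import Iterable


def merge_results(batches: Iterable[list[dict]]) -> list[dict]:
    """Merge result lists from several searches, keeping the first copy of each place."""
    seen: set[str] = set()
    merged: list[dict] = []
    for batch in batches:
        for place in batch:
            key = place["place_id"]  # hypothetical unique key per place
            if key in seen:
                continue  # already collected by an earlier search
            seen.add(key)
            merged.append(place)
    return merged


# Two overlapping searches: "Jones LLP" shows up in both.
lawyers = [{"place_id": "a1", "name": "Smith Law"}, {"place_id": "b2", "name": "Jones LLP"}]
attorneys = [{"place_id": "b2", "name": "Jones LLP"}, {"place_id": "c3", "name": "Lee Legal"}]

merged = merge_results([lawyers, attorneys])
print(len(merged))  # 3 unique places out of 4 rows
```

So even if each query is capped at ~120, running several related queries only adds the places that are new.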

I see, I had a feeling that it was due to a Google limitation. I was using ‘add from google map’ and typing the query there, but I will try your workaround. It seems like a time saver.

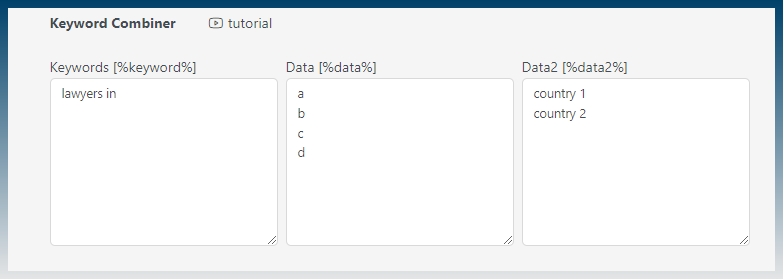

Good tip on going more granular, too. You could go deeper and also use all the neighborhoods in a particular city to get more results.

I guess the ‘combine keywords’ tool in SCM will be very useful for creating a list of queries to search.
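For anyone who wants to build the query list outside SCM, a rough sketch of the same idea is below. It is only an illustration, not how the ‘combine keywords’ tool is implemented; `combine_queries` and its `template` parameter are names invented for the example.

```python
from itertools import product


def combine_queries(keywords, locations, template="{keyword} in {location}"):
    """Build one search query per keyword/location pair, skipping case-insensitive repeats."""
    queries = []
    seen = set()
    for keyword, location in product(keywords, locations):
        query = template.format(keyword=keyword.strip(), location=location.strip())
        if query.lower() not in seen:
            seen.add(query.lower())
            queries.append(query)
    return queries


queries = combine_queries(["lawyer", "attorney"], ["Toronto", "Montreal"])
for q in queries:
    print(q)
# lawyer in Toronto
# lawyer in Montreal
# attorney in Toronto
# attorney in Montreal
```

Swap the locations for a list of neighborhoods to go one level deeper within a single city.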

Thanks for your help

On another note, if I want to run multiple threads with scraping proxies, do you have any suggestions, or do you know the best settings to scrape Google Maps like a boss?

Thanks

Edit: After looking at your ‘Settings’ tutorial, I see that proxies are not used for scraping. I guess you have to use one of these API services, Scrapeowl or Serpapi, to accomplish that.

I noticed this as well. I tried scraping “pizza Orlando, FL” .. with a 50 limit … there are HUNDREDS of pizza GM listings in Orlando that I was able to see manually, but only 37 were found by SCM.

Proxies are enabled for Google maps!

Need to try it with extra keywords, or add the query via a zoomed out map instead of just using keyword search.

Okay, I tried it with a zoomed-out map and a 150 limit, and this time I got fewer than before: 34 listings. (There are 200+ pizza places in Orlando.) I also tried more/different keywords and different IPs .. each time always 30-40 listings .. With the 150 limit, the most I’ve ever seen was 55 … The least I got was 25 listings for “restaurant in Orlando FL”, which is a ridiculously low number since Orlando has more restaurants than people ..

The problem appears to happen during the “scrolling for results” part of the log .. it seems to cap out after a few scrolls if it takes too long. You might need to increase your timeout or allow us to set it.

PS: as I said in the other thread, there is another scraper that can pull reviews on each listing reliably and can get at least 100 listings per keyword/town (without showing a visible window) .. so we know it is certainly technically possible.

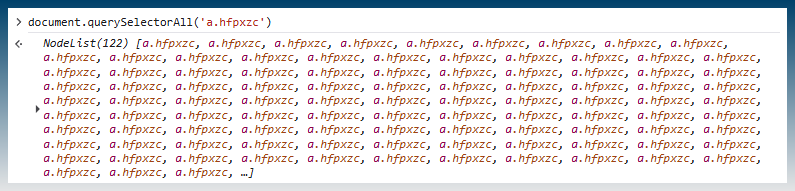

It scrolls down the page, and when the count of results is the same for 2 scroll events it exits early.

Might be possible to add some extra wait time in between.

Need to have the window open to see why it’s not working.

okay .. now it caps out at 120 … which I believe is Google’s cap per GM query. Great job. It would be great if users could set the timeouts instead of hardcoding them … just in case Google changes them in the future similar to .. see how Scrapebox does it:

Now all we need to do is get the reviews working properly!!

Right now in the code it scrolls, then waits 4 secs, then it scrolls again and waits 4 secs.

So 2 sets of scrolls.

If we want it to work better, I would add 1 extra set of scrolls.

But let’s see if the changes now are good enough or require some extra tweaking to get right.
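As a rough picture of that scroll/wait logic and what an extra set of scrolls buys, here is a small simulation. It is a sketch under assumptions, not the real SCM code: `scroll_until_stable`, `make_fake_page` and all parameter names are hypothetical, and the fake page simply replays a list of result counts instead of driving a browser.

```python
import time
from typing import Callable


def scroll_until_stable(
    scroll_and_count: Callable[[], int],
    max_results: int = 120,    # assumed cap per query
    wait_seconds: float = 4.0,  # pause between scrolls
    stable_rounds: int = 2,     # scrolls in a row with no new results before giving up
    max_scrolls: int = 100,     # hard safety limit
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Scroll until the result count stops growing or a limit is reached."""
    last_count = 0
    unchanged = 0
    count = 0
    for _ in range(max_scrolls):
        count = scroll_and_count()
        if count >= max_results:
            break
        if count == last_count:
            unchanged += 1
            if unchanged >= stable_rounds:
                break  # nothing new for several scrolls: assume the list has ended
        else:
            unchanged = 0
        last_count = count
        sleep(wait_seconds)
    return count


def make_fake_page(counts):
    """Simulated page: each scroll reports the next count, then repeats the last one."""
    it = iter(counts)
    state = {"last": 0}

    def scroll_and_count() -> int:
        state["last"] = next(it, state["last"])
        return state["last"]

    return scroll_and_count


# A slow page where new results sometimes take two scrolls to appear.
slow_page = [20, 40, 40, 60, 60, 60, 80]
two_rounds = scroll_until_stable(make_fake_page(slow_page), sleep=lambda _: None)
three_rounds = scroll_until_stable(make_fake_page(slow_page), stable_rounds=3, sleep=lambda _: None)
print(two_rounds, three_rounds)  # 60 80
```

With two unchanged scrolls allowed, the simulated run gives up at 60 results; allowing a third lets it reach 80. Keeping the wait and the round count as parameters is what would let users tune them if Google changes how the list loads.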

Yes .. I ran some tests and listings are coming in a lot better .. reviews are slightly better than before but still struggling. Can you open it up to allow us to tweak the variables (# of scrolls, delays, timeouts etc.) for both listings and reviews, so we can see for ourselves which values work best? That’s what other scrapers like Scrapebox do .. because these change as Google changes their platform.