This is a limitation of the Groq AI models: the input token limit is too small to fit five articles' worth of context.

Solution 1

Use only the article headings as context instead of the full article text.

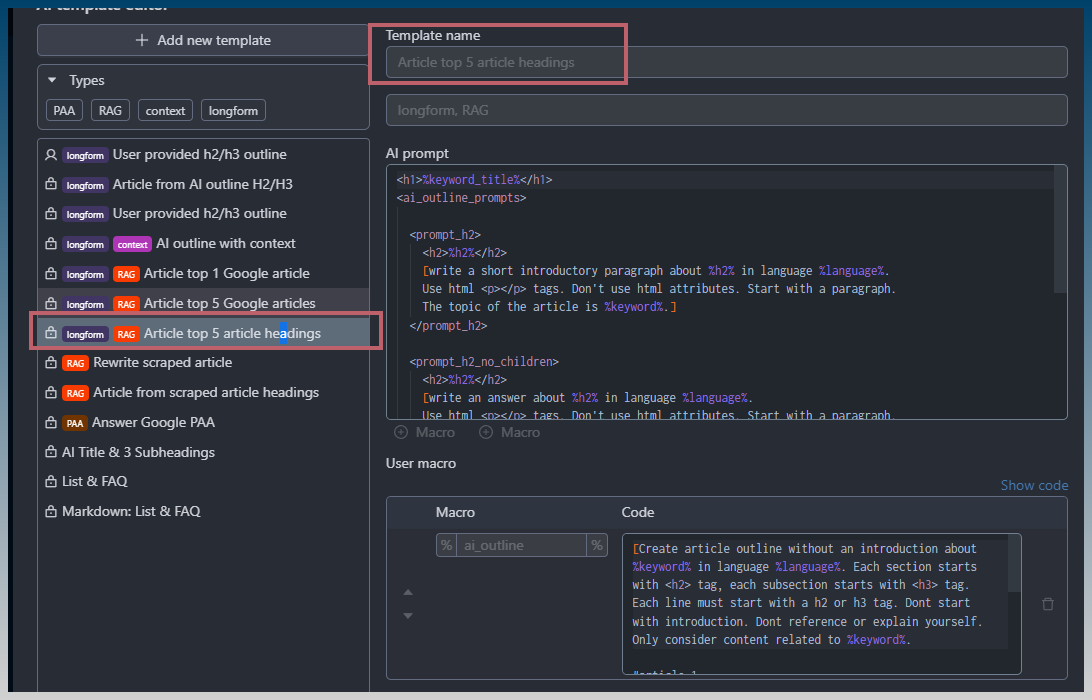

Load the headings of the top 5 articles returned by RAG.
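
If you are building the context in code rather than in the UI, the same idea can be sketched roughly like this. It assumes each retrieved article is a dict with hypothetical `heading` and `body` keys, sorted most relevant first; your retrieval step may return a different shape, and `build_heading_context` is just an illustrative name.

```python
from typing import Dict, List


def build_heading_context(articles: List[Dict[str, str]], top_k: int = 5) -> str:
    """Join the headings of the top_k retrieved articles into one context string.

    Assumes (hypothetically) that each article is a dict with a "heading" key
    and that the list is already sorted by relevance, best match first.
    """
    # Keep only the headings; the article bodies are dropped to save tokens.
    headings = [article["heading"] for article in articles[:top_k]]
    return "\n".join(f"- {heading}" for heading in headings)
```

The resulting string is a short bulleted list of headings, so it should use far fewer input tokens than five full article bodies.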

Solution 2

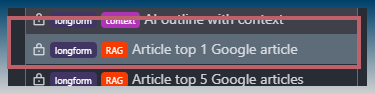

Use only the top 1 article, not the top 5.
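
In code, this could look something like the sketch below. As an assumption, the retrieved articles are dicts with hypothetical `heading` and `body` keys, sorted most relevant first, and `build_top1_context` is an illustrative name, not part of any library.

```python
from typing import Dict, List


def build_top1_context(articles: List[Dict[str, str]]) -> str:
    """Return the heading and body of the single most relevant article.

    Assumes (hypothetically) that the list is sorted by relevance, best match
    first, and that each article has "heading" and "body" keys.
    """
    if not articles:
        # Nothing was retrieved, so there is no context to pass to the model.
        return ""
    best = articles[0]
    return f"{best['heading']}\n\n{best['body']}"
```

Passing one full article keeps the detail the headings would lose, at roughly a fifth of the input size of the top 5 (if the articles are of similar length).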

Solution 3

Switch to a model with a larger context window.