What is the default scraping interval for dynamic pages?

Is it set to, for example, run randomly between 1 and 5 seconds?

It’s not a random interval. If it’s set to 5s, it will always wait exactly that long.

Is there any need to randomise?

Is there a problem with consistent intervals?

In general, not really.

Once you are on the page, it takes a random amount of time to load the page, scrape its content, write it to the hard drive, and for SCM to clean up and start again.

The scraping wait time is consistent, but the time to scrape and finish a page is always random.
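To illustrate that point, here is a rough Python sketch. It is not SCM's code: the function name `simulate_request_gaps` and the 0.5 to 4 second load-time range are made up for illustration. It only shows that a fixed wait plus a variable page-processing time gives irregular gaps between requests.

```python
import random

def simulate_request_gaps(num_pages, fixed_wait, min_load=0.5, max_load=4.0, seed=None):
    """Return the simulated time between consecutive requests, in seconds.

    fixed_wait is the constant configured wait. The load-time range is an
    assumed stand-in for loading, scraping, and saving a page.
    """
    rng = random.Random(seed)
    gaps = []
    for _ in range(num_pages):
        load_time = rng.uniform(min_load, max_load)  # differs for every page
        gaps.append(fixed_wait + load_time)          # the wait itself never changes
    return gaps
```

Calling `simulate_request_gaps(5, fixed_wait=5.0)` returns five different gaps, each somewhere between 5.5 and 9 seconds, even though the wait setting stays at 5.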

Sites can rate limit you to stop you from essentially carrying out a DoS attack.

They generally don’t care whether you are accessing the server 1 second apart or 3 seconds apart. In other words, it’s not the interval between requests that matters but the number of requests over a time period.

If you are worried about being detected as a ‘bot’, most sites will throw up JavaScript checks and CAPTCHAs rather than check your access intervals. I know Cloudflare can check your browser to see if you are a bot. However, from what I know it’s just a simple JavaScript check, which will stop the static scraper but usually not the dynamic scraper.

In the end, though, rate limits are how they stop you.
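As a rough sketch of why the request count matters more than the spacing, here is a minimal sliding-window limiter in Python. This is a guess at how a site might count requests, not any particular site's implementation; the class name `SlidingWindowLimiter` and the limit of 10 requests per 60 seconds are assumptions.

```python
from collections import deque

class SlidingWindowLimiter:
    """Allow at most max_requests per window_seconds (hypothetical server-side check)."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.timestamps = deque()  # times of the requests still inside the window

    def allow(self, now):
        # Forget requests that have fallen out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.max_requests:
            return False  # a site might answer with a 403 or 429 here
        self.timestamps.append(now)
        return True

# Twelve requests, one second apart: the 11th and 12th are rejected.
limiter = SlidingWindowLimiter(max_requests=10, window_seconds=60)
evenly_spaced = [limiter.allow(t) for t in range(12)]
```

Feeding the same limiter twelve irregularly spaced timestamps inside one minute gives the same outcome: ten allowed, two rejected. Only spreading the requests over a longer period changes the result.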

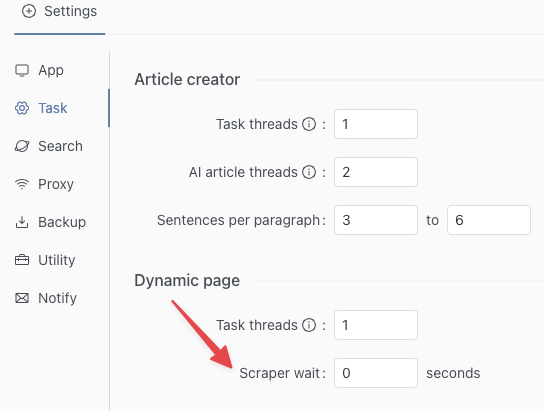

It adds a wait time between opening each new browser.

It’s an optional setting.

If you are trying to scrape 500 URLs from the same domain, adding this wait time will slow down the number of requests to the same server.

But the wait time is fixed, not randomized.
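In Python terms, a fixed wait of this kind might look roughly like the sketch below. This is not how SCM is implemented; `scrape_with_fixed_wait` and the `fetch` callable are hypothetical placeholders for whatever opens the browser and scrapes the page.

```python
import time

def scrape_with_fixed_wait(urls, fetch, wait_seconds, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a fixed wait_seconds between calls.

    fetch is a hypothetical callable standing in for "open a browser and scrape".
    sleep can be swapped out so the function can be tried without real delays.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(wait_seconds)  # always the same length, never randomised
        results.append(fetch(url))
    return results
```

With `wait_seconds=5` and 500 URLs, the waits alone add 499 × 5 seconds, roughly 41.5 minutes, on top of the page load times.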

When is it necessary to add waiting time?

Only if you get some form of 403 after accessing the same domain multiple times.

It’s rare that you would need it, though.

Even when using a task to access 500 URLs on the same domain, the fact that each page has to fully load and then wait for elements to appear means you can’t really get through more than 1 URL every 5 seconds.

Understood.